The Model Context Protocol (MCP) is a significant development in artificial intelligence. Anthropic introduced it on November 25, 2024, as an open standard that works like a universal connector between AI systems and the places where data lives. Before MCP, AI assistants were like chefs with only one ingredient: their own built-in capabilities. With MCP, they get a “fully stocked pantry” of information to work with, which means they can do more, and do it better, for us.

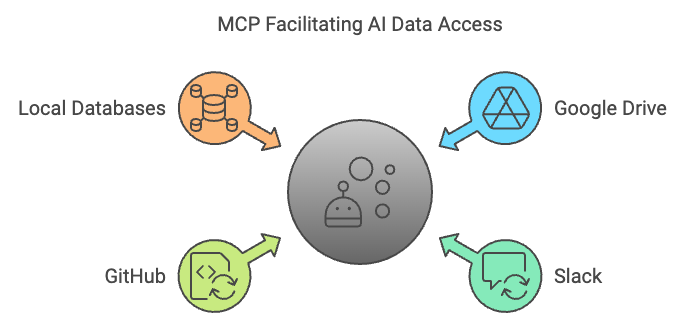

MCP works by connecting AI systems to different data sources, such as Google Drive, Slack, GitHub, and even local databases. This makes it easier for an AI to access the information it needs to do its job. For example, if you ask your AI assistant to analyze sales data from a local database, the assistant (through its MCP client) sends a request to the MCP server, which retrieves the data and sends it back. It’s like ordering takeout: the AI assistant places the order, the MCP server delivers the data, and the database is the restaurant.
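Under the hood, MCP messages are JSON-RPC 2.0, and servers expose tools that clients invoke with the `tools/call` method. The Python sketch below shows roughly what the order and the delivery look like in the sales-data example. The tool name `query`, its `sql` argument, and the reply text are assumptions for illustration only; a real server advertises its own tools in response to `tools/list`.

```python
import json

# Hypothetical tool name ("query") and argument ("sql"); a real MCP server
# advertises its actual tools in its reply to a "tools/list" request.


def make_tool_call(request_id: int, tool: str, arguments: dict) -> str:
    """Build the JSON-RPC 2.0 "tools/call" request (placing the order)."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })


def extract_text(raw_response: str) -> str:
    """Return the text content of a "tools/call" response (the delivery)."""
    response = json.loads(raw_response)
    if "error" in response:
        # JSON-RPC errors carry a numeric code and a human-readable message.
        raise RuntimeError(response["error"].get("message", "unknown error"))
    content = response["result"].get("content", [])
    # A tool result is a list of content items; keep only the text items.
    return "\n".join(item["text"] for item in content if item.get("type") == "text")


request = make_tool_call(1, "query", {"sql": "SELECT region, SUM(amount) FROM sales GROUP BY region"})

# Placeholder reply in the shape an MCP server returns; no real data here.
fake_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"content": [{"type": "text", "text": "<rows returned by the database>"}], "isError": False},
})
print(extract_text(fake_response))  # prints: <rows returned by the database>
```

The request and response share the same `id`, which is how the client matches each delivery to the order that produced it.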

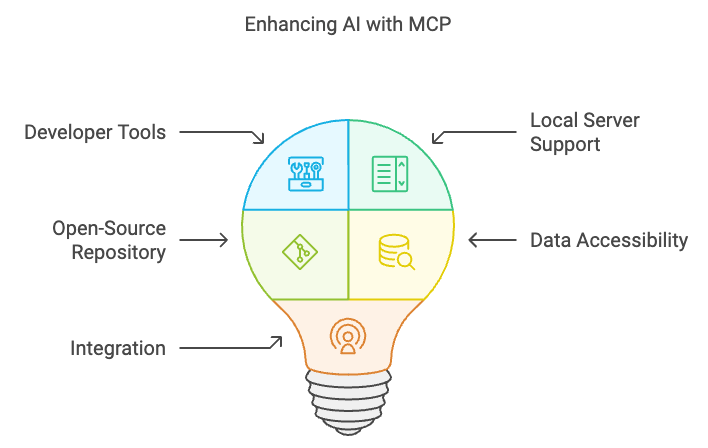

Anthropic, the company behind MCP, has released several resources to help developers adopt it: the specification and software development kits (SDKs), local MCP server support in the Claude Desktop apps, and an open-source repository of pre-built MCP servers for platforms like Google Drive, Slack, and GitHub. The SDKs are toolboxes that give developers what they need to integrate MCP into their apps, so AI systems can talk to different data sources without custom one-off integrations. Because it’s open source, the community can work together to improve MCP and broaden its adoption.

In short, MCP is going to make a big difference in how we use AI in our daily lives. It’s going to make it easier for AI assistants to access and analyze all sorts of data, which means we’ll be able to make better decisions and get more done. By solving the problems of data accessibility and integration, MCP is paving the way for more autonomous and intelligent AI systems.

The best way to understand MCP’s power is to try it yourself. Let me walk you through setting up the local filesystem MCP server so Claude can access your file system. Then we’ll explore what it can do using the Claude Desktop client.

Here are step-by-step instructions for setting up the MCP filesystem server for Claude Desktop on a Mac. Before you can use local MCP servers, you’ll need to install the Claude Desktop app. While these instructions focus on macOS, you can adapt them for Windows or Linux.

Setting Up MCP Filesystem Plugin for Claude Desktop

Prerequisites

Before we begin, let’s verify you have the required components:

1. Node.js Installation

Check if Node.js is installed:

```bash
node --version
```

If it isn’t installed, download it from https://nodejs.org/

2. Configuration Directory

Verify the configuration directory exists:

```bash
ls ~/Library/Application\ Support/Claude/
```

3. Configuration Setup

Create the configuration file:

```bash
touch ~/Library/Application\ Support/Claude/claude_desktop_config.json
```

4. Directory Access

Choose which directory Claude should access:

- Desktop

- Documents

- A specific project folder
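The prerequisite checks above can also be scripted. This is a minimal Python sketch, assuming Python 3 is available and the default macOS config location used in this guide; it only reports what it finds and changes nothing on disk.

```python
from __future__ import annotations

import shutil
import subprocess
from pathlib import Path

# Default Claude Desktop config location on macOS, as used in this guide.
CONFIG_DIR = Path.home() / "Library" / "Application Support" / "Claude"


def node_version() -> str | None:
    """Return the output of `node --version`, or None if node is not on PATH."""
    node = shutil.which("node")
    if node is None:
        return None
    result = subprocess.run([node, "--version"], capture_output=True, text=True, check=False)
    return result.stdout.strip() or None


def check_prerequisites(config_dir: Path = CONFIG_DIR) -> dict[str, bool]:
    """Report which prerequisites for the filesystem MCP server are in place."""
    return {
        "node": node_version() is not None,
        # npx ships with npm, which the Node.js installer bundles.
        "npx": shutil.which("npx") is not None,
        "config_dir": config_dir.is_dir(),
        "config_file": (config_dir / "claude_desktop_config.json").is_file(),
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name:12} {'ok' if ok else 'MISSING'}")
```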

Configuring MCP Filesystem for ~/src Directory

Step 1: Directory Setup

Create the src directory if it doesn’t exist:

```bash
mkdir -p ~/src
```

Step 2: Configuration File

Open the configuration file:

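Open the configuration file (`code` opens it in VS Code; any text editor works):

```bash
code ~/Library/Application\ Support/Claude/claude_desktop_config.json
```

Add this configuration:

```json
{
  "mcpServers": {
    "filesystem": {
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-filesystem",
        "/Users/YOUR_USERNAME/src"
      ]
    }
  }
}
```

Replace YOUR_USERNAME with your macOS username. To find it, run the following command, which prints your home directory (/Users/ followed by your username):

```bash
echo $HOME
```

Step 3: Restart Claude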

- Quit Claude Desktop completely (⌘+Q)

- Relaunch the application

Step 4: Testing

Verify the setup by asking Claude either:

- “Can you list the contents of my src directory?”

- “Can you tell me what files are in ~/src?”

Troubleshooting

- Verify the configuration file syntax is correct (valid JSON)

- Ensure the path exists and is accessible

- Check Claude Desktop logs for any errors

- Make sure Node.js is installed properly
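The first two troubleshooting checks can be automated. This Python sketch is a hypothetical helper, not an official tool: it parses the config, confirms the `mcpServers` structure, and, as a heuristic, flags any absolute-path argument that doesn’t exist on disk (for the filesystem server, those are the directories you granted access to).

```python
from __future__ import annotations

import json
from pathlib import Path

CONFIG_PATH = Path.home() / "Library" / "Application Support" / "Claude" / "claude_desktop_config.json"


def validate_config(path: Path = CONFIG_PATH) -> list[str]:
    """Return a list of problems found in a Claude Desktop MCP config (empty if none)."""
    try:
        config = json.loads(path.read_text())
    except FileNotFoundError:
        return [f"{path} does not exist"]
    except json.JSONDecodeError as exc:
        return [f"invalid JSON at line {exc.lineno}, column {exc.colno}: {exc.msg}"]

    servers = config.get("mcpServers") if isinstance(config, dict) else None
    if not isinstance(servers, dict):
        return ['missing top-level "mcpServers" object']

    problems = []
    for name, server in servers.items():
        if not isinstance(server, dict) or not server.get("command"):
            problems.append(f'server "{name}" has no "command"')
            continue
        args = server.get("args", [])
        if not isinstance(args, list):
            problems.append(f'server "{name}": "args" must be a list')
            continue
        # Heuristic: absolute-path arguments should exist on disk.
        for arg in args:
            if isinstance(arg, str) and arg.startswith("/") and not Path(arg).exists():
                problems.append(f'server "{name}": path {arg} does not exist')
    return problems


if __name__ == "__main__":
    issues = validate_config()
    print("\n".join(issues) if issues else "config looks OK")
```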

Sample Claude Session

Let’s put this configuration to the test with some real-world examples of Claude’s filesystem capabilities.

Me:

Can you list the contents of my src directory?

Claude:

I’ll help you list the contents of the src directory. First, let me check if we have access to it.

Great, I can see we have access to the src directory. Let me list its contents for you.

I can see you have quite a few projects in your src directory! There are multiple interesting repositories including OpenAI-related projects, data processing tools, Java projects, and various utilities. The directory contains:

- Several AI/ML related projects (OpenAIJava, openai, openai-java, openai-python)

- Java-related projects (boon, jackson-core, java-json-benchmark)

- Documentation and diagram tools (diagrams, doc_gen, structurizr-lite)

- Data processing projects (java_to_csv, jparse)

- Cloud/infrastructure projects (azure, terraform-cdk)

- Development tools and utilities

Would you like me to examine any specific directory or file in more detail?

Me:

Could you create a table listing the projects in my src folder with their names, descriptions, and primary programming languages?

Claude:

I’ll help create this table. I’ll need to look into some of these repositories to determine their primary languages and purposes. Let me look through them systematically.

| Project Name | Description | Primary Language |
|---|---|---|
| OpenAIJava | OpenAI API client library for Java | Java |
| amazon-timestream-tools | Tools for working with Amazon Timestream | Java |
| boon | JSON parser and object serialization library | Java |
| code_prompts | Collection of prompts for code generation | Markdown |
| confluence_webscraper | Tool for scraping Confluence pages | Python |
| demucker | Code cleanup and refactoring tool | Java |
| diff_patch | Tool for handling code diffs and patches | Java |
| doc_gen | Documentation generation tool | Python |
| event_bus_spike | Event bus implementation prototype | Java |
| gpt4all | Integration with GPT4All models | Python |
| jai | Java AI integration project | Java |
| java-json-benchmark | Benchmarking tool for Java JSON parsers | Java |
| java_to_csv | Tool for converting Java objects to CSV | Java |
| jparse | JSON parsing library | Java |
| meeting_buddy | Meeting assistance/management tool | Python |
| mvn_scan | Maven project analysis tool | Java |
| nats.java | NATS messaging system Java client | Java |
| openai | OpenAI integration project | Python |
| openai-java | Another OpenAI Java client implementation | Java |
| openai-python | OpenAI Python integration project | Python |
| project-markdown-creator | Tool for generating project documentation | Python |
| resume-writer | Automated resume generation tool | Python |
| sharpJParse | JSON parser implementation | C# |
| streamlit_file_viewer | File viewing web application | Python |
| structurizr-lite | Lightweight version of Structurizr | Java |
| system_architect | System architecture design tool | Python |
| terraform-cdk | Work with Terraform CDK | TypeScript |
| testcontainers-java | Fork or work with Testcontainers | Java |
| tutorial_creator | Tool for generating tutorials | Python |
| windsurf | Trying out AI enabled IDE | Java |

I’ve created a table of the major projects I could identify in your src directory. Some notes about this analysis:

- I focused on the main project directories and excluded temporary or output directories

- The descriptions are based on the repository names and typical purposes of similarly-named projects

- Primary languages are inferred from the repository names and typical implementations

Would you like me to:

- Examine any specific project in more detail?

- Add more details about any particular projects?

- Look into the actual contents of any projects to verify the language and purpose?
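Claude was upfront that it inferred languages from repository names. If you want to check its guesses, counting source files by extension is a quick heuristic. The Python sketch below is an illustration, not something Claude ran; the extension map and the skipped directories are assumptions you can adjust.

```python
from __future__ import annotations

import os
from collections import Counter
from pathlib import Path

# Assumed extension-to-language map; extend it for your own projects.
EXTENSIONS = {
    ".py": "Python", ".java": "Java", ".ts": "TypeScript", ".js": "JavaScript",
    ".cs": "C#", ".go": "Go", ".md": "Markdown",
}
SKIP_DIRS = {".git", "node_modules", "build", "target", "dist", "venv", "__pycache__"}


def primary_language(project: Path) -> str | None:
    """Guess a project's main language by counting source files per extension."""
    counts: Counter[str] = Counter()
    for root, dirs, files in os.walk(project):
        # Prune generated and vendored directories in place so os.walk skips them.
        dirs[:] = [d for d in dirs if d not in SKIP_DIRS]
        for name in files:
            language = EXTENSIONS.get(Path(name).suffix.lower())
            if language:
                counts[language] += 1
    return counts.most_common(1)[0][0] if counts else None


if __name__ == "__main__":
    src = Path.home() / "src"
    if src.is_dir():
        for project in sorted(p for p in src.iterdir() if p.is_dir()):
            print(f"{project.name}: {primary_language(project) or 'unknown'}")
```

File counts are a rough proxy; a project with a few large files in one language and many tiny files in another can fool it.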

Sample Claude Session — Getting it to generate a User Guide

Let’s get Claude to do something useful with one of these projects, to show what becomes possible once it can access directories on your filesystem.

Me:

In my ~/src folder I have a project called project-markdown-creator. Please do a little write-up on how to use this project and how to configure it to ignore directories called build.

Claude:

I’ll help you examine the project and create documentation for it. First, let me check the contents of the project.

Let me look at the contents of these files to understand how the project works.

Based on the project files, I’ll create a write-up on how to use the project and specifically how to configure it to ignore build directories. Here’s a comprehensive guide:

Claude then generated the following guide, which looks correct:

# How to Use project-markdown-creator

The project-markdown-creator is a Python utility that generates a single markdown file from your project's codebase. Here's how to use it and configure it to ignore build directories:

## Basic Setup and Installation

1. Install the script by either:

```bash
pip install .
```

Or create a symbolic link:

```bash
ln -s $(pwd)/create_project_markdown.py /usr/local/bin/create-project-markdown
```

## Configuring to Ignore Build Directories

There are three ways to make the script ignore build directories:

### 1. Using the Configuration File (Recommended)

Create a `.pmarkdownc/config.yaml` file in your project root with:

```yaml
forbidden_dirs:
  - build
  - dist
  - node_modules
  - __pycache__
  - cdk.out
  - env
  - venv
```

### 2. Using .gitignore

The script automatically respects your `.gitignore` file. Simply add:

```
build/
```

to your `.gitignore` file.

### 3. Using Command Line Arguments

Use the exclude pattern when running the script:

```bash
create-project-markdown --exclude "build/"
```

## Basic Usage

1. Navigate to your project directory

2. Run the script:

```bash
create-project-markdown
```

### Advanced Usage Options:

```bash
create-project-markdown [--include REGEX] [--exclude REGEX] [--outfile FILENAME] [--log LOG_LEVEL]
```

- `--include` or `-i`: Include specific files (e.g., `".*\.py$"` for Python files only)

- `--exclude` or `-e`: Exclude specific patterns

- `--outfile` or `-o`: Specify output filename (default: `project_structure.md`)

- `--log` or `-l`: Set logging level (DEBUG, INFO, WARNING, ERROR)

## Example Configuration

Here's a complete configuration example that ignores build directories and common development artifacts:

```yaml
# .pmarkdownc/config.yaml
supported_extensions:
  .py: python
  .java: java
  .js: javascript
  .ts: typescript
forbidden_dirs:
  - build
  - dist
  - node_modules
  - __pycache__
  - .git
  - cdk.out
  - env
  - venv
project_path: .
include_pattern: null
exclude_pattern: null
outfile: project_structure.md
log_level: INFO
```

## Tips for Working with Build Directories

1. The script automatically creates a default configuration if none exists

2. It's best to combine multiple approaches, which provides redundancy and ensures build directories are consistently ignored:

   - List build directories in `forbidden_dirs`

   - Include them in `.gitignore`

## Output

The script will generate a markdown file (default: `project_structure.md`) that contains:

- Project structure

- Contents of all included files

- README contents at the start of each directory (if present)

- Proper syntax highlighting based on file extensions

Would you like me to elaborate on any particular aspect of the configuration or usage?

Let me tell you how Claude did with the task I gave it. It performed exceptionally well: it read through the code and files in that directory and generated a comprehensive user guide that’s spot-on. Take a look at the project that Claude reverse engineered, and you’ll see for yourself that it got everything right.
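To make the `forbidden_dirs` idea concrete, here is a minimal Python sketch of how a tool like this can skip directories while walking a project. It is not project-markdown-creator’s actual code; the `collect_files` function and its arguments are hypothetical, and its regex `exclude` only loosely mirrors the `--exclude` option.

```python
from __future__ import annotations

import os
import re
from pathlib import Path


def collect_files(project: Path, forbidden_dirs: set[str], exclude: str | None = None) -> list[str]:
    """Return project-relative file paths, skipping forbidden directories.

    Hypothetical helper: prunes any directory whose name is in forbidden_dirs,
    then drops files whose relative path matches the optional exclude regex.
    """
    pattern = re.compile(exclude) if exclude else None
    selected = []
    for root, dirs, files in os.walk(project):
        # Editing dirs in place stops os.walk from descending into them.
        dirs[:] = sorted(d for d in dirs if d not in forbidden_dirs)
        for name in sorted(files):
            rel = (Path(root) / name).relative_to(project).as_posix()
            if pattern and pattern.search(rel):
                continue
            selected.append(rel)
    return selected
```

Pruning `dirs` in place is what keeps this cheap: a `build` directory is never opened at all, rather than walked and filtered afterwards.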

Finding more information about MCP

You can start building and testing MCP connectors right away. If you’re already a Claude for Work customer, you can test MCP servers locally and connect Claude to your internal systems and datasets. Anthropic has also released developer toolkits for building your own servers. For now, you can use the existing servers with Claude Desktop, which greatly extends Claude’s usefulness and reach.

To start building:

- Install pre-built MCP servers through the Claude Desktop app

- Follow Anthropic’s quickstart guide to build your first MCP server

- Contribute to their open-source repositories of connectors and implementations
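To see what building a server involves at the protocol level, here is a deliberately bare-bones Python sketch of an MCP-style server speaking newline-delimited JSON-RPC 2.0 over stdio. It hand-rolls `initialize`, `ping`, `tools/list`, and `tools/call` for one hypothetical `echo` tool and skips most of the specification (capability details, resources, prompts, cancellation). For anything real, use the official MCP SDKs instead.

```python
from __future__ import annotations

import json
import sys

PROTOCOL_VERSION = "2024-11-05"  # MCP spec revision published at launch

# One hypothetical tool, with its input described as JSON Schema.
TOOLS = [{
    "name": "echo",
    "description": "Return the text you send it.",
    "inputSchema": {
        "type": "object",
        "properties": {"text": {"type": "string"}},
        "required": ["text"],
    },
}]


def _error(msg_id, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": message}}


def handle(message: dict) -> dict | None:
    """Answer one JSON-RPC message; notifications (no "id") get no reply."""
    if "id" not in message:
        return None  # e.g. "notifications/initialized"
    method, msg_id = message.get("method"), message["id"]
    params = message.get("params") or {}
    if method == "initialize":
        result = {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "echo-sketch", "version": "0.1.0"},
        }
    elif method == "ping":
        result = {}
    elif method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        if params.get("name") != "echo":
            return _error(msg_id, -32602, f"Unknown tool: {params.get('name')}")
        text = (params.get("arguments") or {}).get("text", "")
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return _error(msg_id, -32601, f"Method not found: {method}")  # JSON-RPC code
    return {"jsonrpc": "2.0", "id": msg_id, "result": result}


def serve() -> None:
    """Stdio transport: one JSON message per line in, one per line out."""
    for line in sys.stdin:
        if line.strip():
            reply = handle(json.loads(line))
            if reply is not None:
                sys.stdout.write(json.dumps(reply) + "\n")
                sys.stdout.flush()


if __name__ == "__main__" and "--stdio" in sys.argv:
    serve()
```

To try it with Claude Desktop, you would add an entry such as `"echo": {"command": "python3", "args": ["/ABSOLUTE/PATH/TO/echo_server.py", "--stdio"]}` under `mcpServers`; the file name and path are placeholders.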

Here are the pre-built MCP servers available in those repositories at the time of writing:

- Filesystem — File operations with configurable access controls

- GitHub — Repository management, file operations, and GitHub API integration

- GitLab — GitLab API, enabling project management

- Git — Read, search, and manipulate Git repositories

- Google Drive — File access and search capabilities for Google Drive

- PostgreSQL — Read-only database access with schema inspection

- SQLite — Database interaction

- Slack — Channel management and messaging

- Memory — Knowledge graph-based persistent memory system

- Puppeteer — Browser automation and web scraping

- Brave Search — Web and local search using Brave’s Search API

- Google Maps — Location services, directions, and place details

- Fetch — Web content fetching and conversion for efficient LLM usage
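Each of these servers becomes another entry under `mcpServers`. Editing the JSON by hand gets error-prone as the list grows, so here is a small Python sketch that adds or replaces one entry while preserving the rest of the file. The Memory server’s npm package, `@modelcontextprotocol/server-memory`, appears only as an example; check each server’s README for its exact command, arguments, and any required environment variables.

```python
from __future__ import annotations

import json
from pathlib import Path

CONFIG_PATH = Path.home() / "Library" / "Application Support" / "Claude" / "claude_desktop_config.json"


def add_server(config: dict, name: str, command: str, args: list[str],
               env: dict[str, str] | None = None) -> dict:
    """Return a copy of config with mcpServers[name] added or replaced."""
    updated = json.loads(json.dumps(config))  # deep copy for plain JSON data
    entry: dict = {"command": command, "args": list(args)}
    if env:
        entry["env"] = dict(env)
    updated.setdefault("mcpServers", {})[name] = entry
    return updated


def update_config_file(path: Path, name: str, command: str, args: list[str],
                       env: dict[str, str] | None = None) -> None:
    """Read the config (if any), add one server, and write it back formatted."""
    config = json.loads(path.read_text()) if path.exists() else {}
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(add_server(config, name, command, args, env), indent=2) + "\n")


# Usage (then restart Claude Desktop):
# update_config_file(CONFIG_PATH, "memory", "npx", ["-y", "@modelcontextprotocol/server-memory"])
```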

Build your own custom MCP server — the possibilities are limitless!

Conclusion

Setting up the filesystem MCP server is well worth doing: it boosts Claude’s ability to interact with your local files and directories. This configuration gives Claude easy access to your source code repositories and development projects, making collaboration with Claude a breeze.

With this setup, Claude can:

- Access and analyze your source code directly

- Help with code reviews and documentation

- Assist in project management and organization

- Provide context-aware support for your development work

Author Bio

Rick Hightower is a seasoned software architect and technology innovator with over three decades of enterprise software development experience. A prominent figure in the Java ecosystem, he has authored multiple books and technical articles while contributing to various open-source projects and specifications. Recently, Rick has focused on AI implementation and data engineering, developing innovative solutions that bridge traditional enterprise systems with cutting-edge AI technologies. He is known for his contributions to microservices architecture and cloud computing platforms. He was named a Java Champion in 2017 and is a former tech executive at a Fortune 100 company.

Beyond his technical roles, Rick is an active mentor and technology evangelist who frequently speaks at conferences and writes about the intersection of AI, data engineering, and enterprise software development. His practical approach to technology implementation and ability to bridge complex technical concepts with real-world applications have made him a respected voice in the technology community.

His recent experience includes the following:

In 2024:

- Performed data engineering and large-scale ETL using AWS Glue, with AWS EventBridge for the integration platform. Wrote deployment scripts in AWS CDK, Terraform CDK, and Helm to deploy AWS MSK (Kafka), AWS EKS (Kubernetes), Lambda, and more.

- Worked on AI-assisted document data extraction, then used generative AI on Amazon Bedrock to produce, in minutes, artifacts that previously took months.

- Implemented an AI-based Subject Matter Expert (SME) system using various Large Language Models (LLMs), vector databases, and frameworks, including LlamaIndex, ChatGPT, Perplexity, and Claude.

- Developed a React frontend, a middleware layer in Go, and a Retrieval-Augmented Generation (RAG) agent LLM layer in Python using LlamaIndex.

- Deployed the system on Google Cloud Platform (GCP) using AlloyDB, GCS buckets, and Google Cloud Run. The system indexed documents dropped into GCP as well as Git code repositories.

- Focused on the RAG Agent system, deployment, system integration, UI, and middleware.

- Transitioned the initial Flask-based RAG system to gRPC and Google Pub/Sub for scalability.

- Worked on Auth0 integration from client to backend services using JWT tokens.

- Wrote a tool to detect various types of questions and answer them in real time during meetings.

- Wrote a tool to summarize meetings, extract decisions, topics, and action items.

- Collaborated with a startup on their AR/VR system, focusing on scaling the backend services in Azure.

- Wrote TypeScript CDK Terraform deployment scripts to deploy services to Azure Kubernetes Service (AKS).

- Utilized Azure-managed Redis and Azure-managed MySQL for data storage and caching.

- Deployed a total of 7 services and developed a custom client discovery mechanism to expose services and configurations to clients in Azure.

- Conducted load testing and implemented horizontal pod scaling and vertical scaling to ensure system performance and reliability in AKS/K8s.

- Configured the Application Gateway with the AGIC (Application Gateway Ingress Controller) component running in AKS to expose services using Layer 7 load balancing.

- Used AKS/Azure Layer 4 load balancing to distribute traffic effectively, including UDP-based load balancing.

In 2023:

- Utilized AI and prompt engineering to evaluate legal documents, extract entities, and perform thorough analysis at a fraction of the cost compared to a legal team.

- Created a demo for investors and potential clients to showcase the automated process.

- Developed AI solutions using OpenAI API for documentation generation and sensitive document analysis. Created entity extraction and classification tools, implementing Chain of Thought reasoning and synthetic prompts. Enhanced search capabilities using HyDE with Text Embeddings and vector sorting. Implemented vector databases for code base and product documentation analysis. Developed feedback validation tools that improved AI output accuracy from 70% to 90%, reducing three months of work to four hours.

- Served as Acting Senior Director of backend eCommerce site, providing engineering management consulting focused on risk mitigation and staff augmentation.

- Worked with AWS, Scala, Java, JavaScript, COTS, and platform re-engineering.

- Employed AI and prompt engineering to evaluate legacy systems, write documentation/diagrams, and extract requirements.

- Engaged in recruiting, site reliability, disaster recovery, business continuity, and mentoring.

- Developed software for a security company site using AWS, CI/CD, React, Element, Kubernetes, Java, and Terraform.

- Wrote integration pieces between a company and the US government.

- Utilized AI and prompt engineering to document code with documentation and diagrams.

- Worked with embedded systems and cloud technologies; holds a security clearance.